Perceptually Based Depth-Ordering Enhancement for Direct Volume Rendering

Lin Zheng1

Yingcai Wu2

Kwan-Liu Ma1

This project was conducted while Yingcai Wu was at UC Davis.

1University of California, Davis

2Microsoft Research Asia

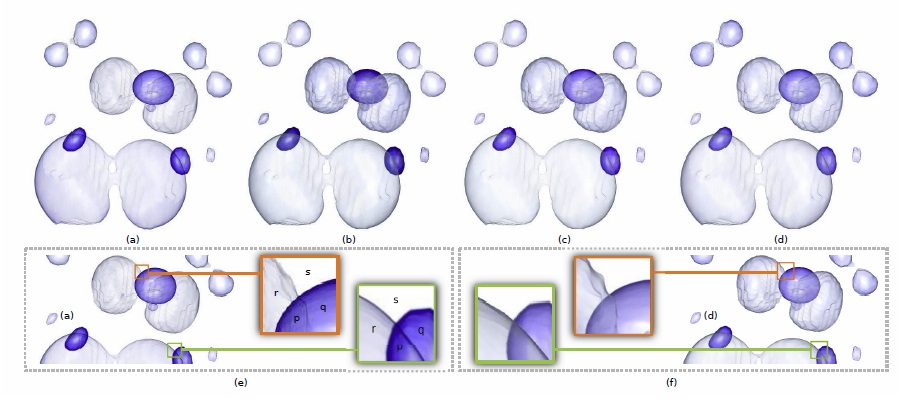

Visualizing complex volume data usually involves rendering selected parts of the volume semi-transparently to reveal its inner structures or to provide context. This makes it challenging for volume rendering methods to produce images with unambiguous depth-ordering perception. Existing methods use visual cues such as halos and shadows to enhance depth perception. Along with other limitations, these methods introduce redundant information and require additional overhead. This paper presents a new approach to enhancing depth-ordering perception of volume rendered images without using additional visual cues. We set up an energy function based on quantitative perception models to measure the quality of the images in terms of the effectiveness of depth-ordering and transparency perception as well as the faithfulness of the information revealed. Guided by the function, we use a conjugate gradient method to iteratively and judiciously enhance the results. Our method can complement existing systems for enhancing volume rendering results. The experimental results demonstrate the usefulness and effectiveness of our approach.

@article {YWu2013b,

author = {Lin Zheng and Yingcai Wu and Kwan-Liu Ma},

title = {Perceptually Based Depth-Ordering Enhancement for Direct Volume Rendering},

journal = {IEEE Transactions on Visualization and Computer Graphics},

year = {2013},

volume = {19},

number = {3},

pages = {446--459}

}

This research was supported in part by HP Labs and the U.S. National Science Foundation through grants CCF-0808896, CNS-0716691, CCF-0811422, CCF-0938114, and CCF-1025269.